In this blog, I wanted to go on a tour down memory lane with the collaboration aspect of good ol'

A video call between two devices has several media channels:

- An audio channel

- A video channel

- A content channel for collaboratively working on documents, files, images, desktops, etc.

At its core, data collaboration is the ability of a conference participant to present content to other participants (even as a static image). More advanced protocols and features allow participants to act on content (such as annotating, direct editing, etc.).

Back in my day...

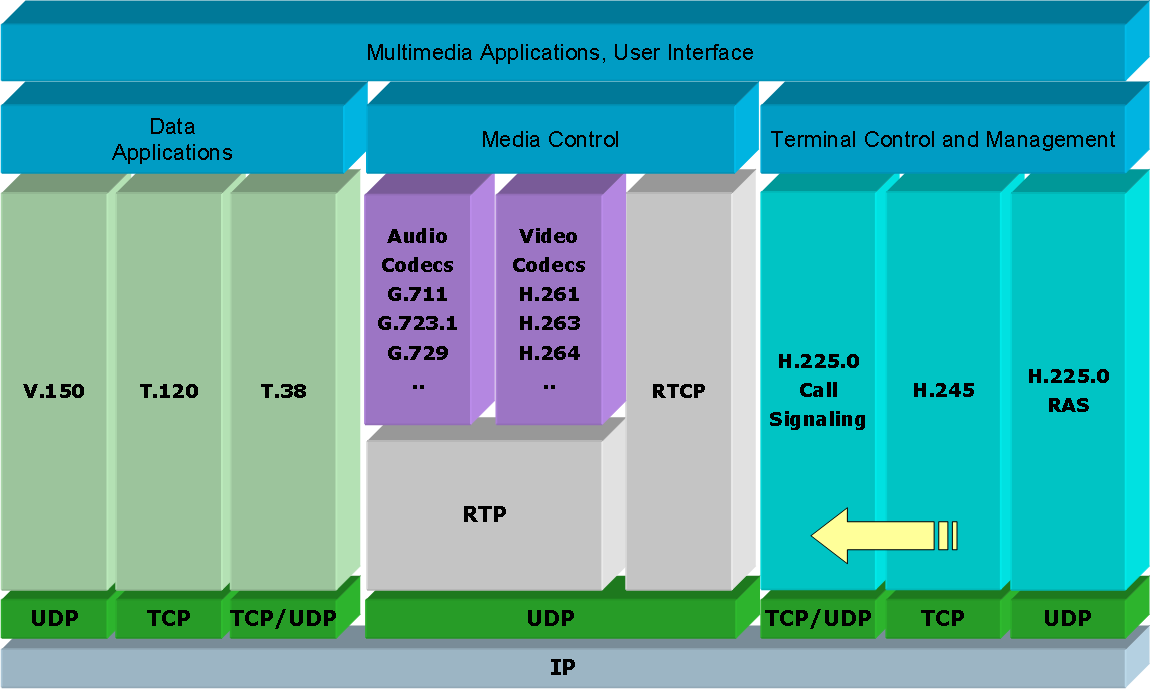

To get a feel for where this content channel sits in the stack, take a look at the following (borrowed from this Wikipedia article):

I like how this figure breaks out the channels. You can see that control channels (H.225, H.245, RAS) are separate channels from the various media channels. Audio and video are passed via RTP using UDP as a transport and the T.120 spec (grandfather of more modern content sharing methods?) is a self-contained mechanism running over TCP.

When I started working with video conferencing solutions, each major player (PictureTel, Polycom, and Tandberg) had its own proprietary method for providing a dedicated content channel, but the standard (for interop) was good ol' T.120.

Like H.320 and H.323, T.120 is an "umbrella" standard developed by the ITU-T during the early to mid-1990s. As an umbrella standard, T.120 has several sub-components which combine to create a self-sufficient set of collaboration tools. At the time, T.120 was developed for H.320 (ISDN) networks but was also available to H.323 endpoints. The key members of the T-spec family are shown hanging out together in the following figure.

The T.120 spec was implemented in several products at the time. I was even surprised to find out that the WebEx MediaTone network leveraged T.120, though it is hard to nail down how prolific that was. It probably doesn't matter, because when I started tinkering with this stuff each vendor was rockin' (or developing, or acquiring - in the case of Polycom and PictureTel) their own method for content sharing.

Enter H.239

The H.239 recommendation is another spawn from the ITU-T that builds on the H.320, H.323, and H.245 standards. The official title of this recommendation says it all: "Role Management and Additional Media Channels for H.3xx-series Terminals". Wow, what a mouthful. What's it mean? Basically, it is a spec that H.32x endpoints can use to spin up additional media channels to share content outside of the audio and video channels AND a way to manage content sharing. An analogy for all of you WebEx users: "pass me the ball".

If H.239 were a bouncing baby boy, its parents would be Polycom and Tandberg. Somewhere around the year 2000 (known as the end of days to COBOL programmers the world over) Tandberg introduced their DuoVideo feature. Right around the same time, PictureTel introduced their "People+Content" feature. PictureTel was acquired by Polycom, who commemorated the event by printing T-shirts that boldly stated: "People+Content==(DuoVideo2)". OK, I am kidding about the T-shirt part, but it would have been a top seller for sure.

Anyway, the point is the H.239 recommendation was based on technologies from Tandberg (now Cisco) and Polycom.

Putting the nostalgia on hold for the moment, let's talk about what H.239 brings to the table. As video codecs evolved and network bandwidth became more available, the viability of using a secondary video channel in a videoconference increased. This "dual video" capability allows two or more endpoints to stand up one "main" (or "live") video stream, typically used for delivery of the "talking heads", and a second "presentation" (or "content") video stream, which can be used for sending pre-recorded content, desktop sharing, document sharing, etc.

Creating the ability to stand up multiple media streams is only one aspect of the recommendation. There are several "control" problems that need to be addressed:

- Channel Association: There needs to be a mechanism to associate the type of content being delivered by each of the video streams. IOW, the receiving system needs to know which stream is "live" and which is "presentation".

- Chair Control: There needs to be a mechanism for allowing endpoints to negotiate and delegate control of the presentation channel. IOW, there needs to be ways to identify who controls "the ball" and a method to pass "the ball" between participants.
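To make those two control problems a little more concrete, here is a toy model in Python. It is only a sketch of the ideas, not the H.239 wire protocol: the `Conference` class, its method names, and the "deny while someone else holds the token" policy are all my own assumptions for illustration. Real endpoints and MCUs may handle a competing request differently.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

VALID_ROLES = ("live", "presentation")  # role names as used in this post


@dataclass
class Conference:
    """Hypothetical model of role labels plus a single presentation token."""
    channel_roles: Dict[int, str] = field(default_factory=dict)
    token_owner: Optional[str] = None  # who currently holds "the ball"

    def open_channel(self, channel_id: int, role: str) -> None:
        # Channel association: every video channel is tagged with a role.
        if role not in VALID_ROLES:
            raise ValueError(f"unknown role: {role}")
        self.channel_roles[channel_id] = role

    def role_of(self, channel_id: int) -> str:
        return self.channel_roles[channel_id]

    def request_token(self, endpoint: str) -> bool:
        # Chair control: grant only if the token is free or already ours.
        # (Assumed policy; a real system might ask the holder to release.)
        if self.token_owner in (None, endpoint):
            self.token_owner = endpoint
            return True
        return False

    def release_token(self, endpoint: str) -> None:
        # Only the current holder can give the token back.
        if self.token_owner == endpoint:
            self.token_owner = None


conf = Conference()
conf.open_channel(1, "live")
conf.open_channel(2, "presentation")
print(conf.role_of(2))
print(conf.request_token("alice"), conf.request_token("bob"))
```

The receiver uses `role_of` to decide what each stream is, and only the endpoint holding the token is allowed to drive the presentation channel.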

Right around the same time that the H.239 recommendation was released, the IETF established a working group called "Centralized Conferencing (xcon)". There were several planned deliverables for this working group that collectively provided the building blocks for establishing and controlling multiple media channels with the Session Description Protocol (SDP).

Note: SDP is a separate standard from SIP. SDP is more or less analogous to H.245 in the ITU-T H.323 recommendation.

Let's start with RFC 4582 "The Binary Floor Control Protocol (BFCP)" because that is what people think of as the H.239-equivalent feature in SIP communications. BFCP basically defines a method for coordinating access to and control of shared resources (a "presentation" video stream, for example) in a conference. The main concept is "Floor Control", which is a mechanism that enables endpoints to negotiate and delegate (mutually exclusive or not) input access to a shared resource. It is worth noting that BFCP only provides a mechanism to negotiate control of a resource. This is completely independent of the actual video streams, so a separate mechanism is needed to associate the media channel with the resource controlled by BFCP in a given conference.
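Here is a rough sketch of the floor control idea in Python. It is a toy, not a BFCP implementation: the `Floor` class and its FIFO queueing policy are my own assumptions, and I only borrow the "Granted" and "Pending" status names from the RFC. Notice that nothing in it knows about video streams, which is exactly the gap described above.

```python
from collections import deque


class Floor:
    """Hypothetical floor: one holder at a time, later requests wait in line."""

    def __init__(self, floor_id):
        self.floor_id = floor_id
        self.holder = None
        self.waiting = deque()

    def request(self, user_id):
        # Free floor, or the requester already holds it: grant right away.
        if self.holder is None or self.holder == user_id:
            self.holder = user_id
            return "Granted"
        # Otherwise queue the request (assumed FIFO policy).
        if user_id not in self.waiting:
            self.waiting.append(user_id)
        return "Pending"

    def release(self, user_id):
        # A waiting user who gives up is simply dropped from the queue.
        if user_id != self.holder:
            if user_id in self.waiting:
                self.waiting.remove(user_id)
            return self.holder
        # The holder releases: hand the floor to the next user in line, if any.
        self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder


floor = Floor(floor_id=1)
print(floor.request(1234))
print(floor.request(5678))
print(floor.release(1234))
```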

Entering from stage right: RFC 4574 "The Session Description Protocol (SDP) Label Attribute". This RFC defines a new attribute for SDP called a "label". This attribute allows the sender to attach a "label" to individual SDP media streams, which can then be referenced by other mechanisms (e.g. BFCP).

The last piece of the puzzle is RFC 4796 "The Session Description Protocol (SDP) Content Attribute". This RFC defines another SDP attribute called "content". This attribute provides a method for the sender to refine the description of the content of a specific media channel, which allows a receiver to handle the media channel appropriately, for example by displaying the primary channel full screen and the content channel in a small window. This is equivalent to how H.239 associates a role with the primary and content (or presentation) channels.
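To show how the "label" and "content" attributes might look in practice, here is a small Python sketch that parses a made-up SDP body and picks a layout for each video stream. The addresses, ports, payload types, and label values are invented for the example, and `parse_media` and `pick_layout` are hypothetical helpers, not part of any SIP stack. The `main` and `slides` content values come from RFC 4796.

```python
SDP_OFFER = """\
v=0
o=- 0 0 IN IP4 192.0.2.10
s=-
c=IN IP4 192.0.2.10
t=0 0
m=audio 49170 RTP/AVP 0
m=video 49172 RTP/AVP 96
a=rtpmap:96 H264/90000
a=label:10
a=content:main
m=video 49174 RTP/AVP 96
a=rtpmap:96 H264/90000
a=label:11
a=content:slides
"""


def parse_media(sdp):
    """Return one dict per m= section with its media type, label, and content."""
    streams = []
    for line in sdp.splitlines():
        if line.startswith("m="):
            media_type = line[2:].split()[0]
            streams.append({"media": media_type, "label": None, "content": []})
        elif streams and line.startswith("a="):
            # Media-level attribute: attach it to the most recent m= section.
            name, _, value = line[2:].partition(":")
            if name == "label":
                streams[-1]["label"] = value.strip()
            elif name == "content":
                # The content attribute may carry a comma-separated list.
                streams[-1]["content"] = value.strip().split(",")
    return streams


def pick_layout(streams):
    """Map each video stream's label to an assumed display slot."""
    layout = {}
    for stream in streams:
        if stream["media"] != "video":
            continue
        if "slides" in stream["content"]:
            layout[stream["label"]] = "small_window"
        elif "main" in stream["content"]:
            layout[stream["label"]] = "full_screen"
        else:
            layout[stream["label"]] = "default"
    return layout


print(pick_layout(parse_media(SDP_OFFER)))
```

The label is just an identifier that something like BFCP can point at, while the content value tells the receiver what the stream is for. The layout choice itself is up to the receiving endpoint.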

The challenge with the SIP solution is that it has the necessary building blocks, but the "glue" that ties all of this together is left up to equipment manufacturers. So, the bottom line is that if you are deploying SIP/BFCP in a heterogeneous environment, make sure you are doing proper interop testing. In contrast, support for H.239 is more ubiquitous across modern endpoints, MCUs, etc.

Conclusion

The ability to share content in a video conference should be considered a must-have requirement if you want to take full advantage of a video conferencing (or telepresence for the Cisco folks) solution. Being able to share your desktop, annotate a document/whiteboard, chat out of band, or share other content in-line with your video conference is what collaboration is all about. Without it, you are just a bunch of talking heads, and who needs that? Of course, I know there are other solutions that can be spliced together to create a collaborative workflow (like running a web conference in parallel to your video conference), but that is probably a separate topic for a different day.

Thanks for reading. If you have time, post a comment!
